It all started with the “Barbenheimer.”

And now, 60 days later, I’m exhausted. Brittany and I have seen 11 movies in theaters over the past two months. After learning that movie tickets can now cost up to $20.41 (no joke), we signed up for the AMC “A-List,” a subscription service that lets you see up to three movies per week for a monthly fee of $21.50. Our mission, which we chose to accept, was to see as many movies as possible to get our money’s worth out of the subscription. With the period coming to an end, I wanted to answer three key questions about movie ratings:

1. What’s the difference between the different rating sources?

User-based Reviews:

IMDb scores are based on a weighted-average rating of all registered users (meaning everyday people). This is supposed to give you a good idea of what normal consumers think of the movie. However, not all votes carry the same weight, a design meant to prevent individuals (or groups) from rigging the rating. IMDb says they don’t disclose that calculation “to ensure [their] rating mechanism remains effective.” As with many other user-based review sites, the biggest pitfall is that most people only submit a review when they have very strong positive or negative feelings about a movie, which skews the ratings toward either enthusiastic supporters or strong critics.

Audience Score, by Rotten Tomatoes, is similar to IMDb in that it represents the percentage of everyday users who rated a movie or TV show positively. There isn’t much information available on how the final score is tallied or if there are any weightings. Regardless, similar to IMDb, this score is susceptible to review bombing or inflated ratings by franchise cults.

Brittany’s Ratings. Brittany is my most trusted movie companion. Not only do we share similar tastes, but we also experience these movies together, which, whether we admit it or not, does matter. For example, the theater was freezing cold during Haunted Mansion, which literally created a chilling atmosphere. And during The Equalizer 3, the projector was out of focus for the first 45 minutes of the film, leaving us both annoyed.

Critic-based Reviews:

The Tomatometer, by Rotten Tomatoes, is a score based on the opinions of hundreds of film and television critics. It gives a quick and reliable idea of whether a movie is worth watching. However, the biggest issue with the Tomatometer is that it breaks down complex opinions into a “Yes” or “No” vote and then takes a simple average of those votes. So if every critic scored a movie 2.5 out of 4 stars, the Tomatometer would count all of those as positive reviews and give the film a 100% rating, whereas a simple average of the star ratings would give the movie 62.5/100.
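To make that arithmetic concrete, here is a minimal Python sketch of the two approaches. The function names and the 60% "positive" cutoff are my own assumptions for illustration, not Rotten Tomatoes' actual rules; the only thing taken from the example above is that 2.5 out of 4 stars counts as a positive review.

```python
def tomatometer(scores, max_score=4.0, fresh_cutoff=0.6):
    """Percent of reviews counted as positive.

    fresh_cutoff is a hypothetical threshold chosen for this sketch,
    not a documented Rotten Tomatoes rule.
    """
    positive = sum(1 for s in scores if s / max_score >= fresh_cutoff)
    return 100.0 * positive / len(scores)


def simple_average(scores, max_score=4.0):
    """Mean star rating rescaled to a 0-100 range."""
    return 100.0 * sum(scores) / (len(scores) * max_score)


# Ten critics who each give the movie 2.5 out of 4 stars.
scores = [2.5] * 10

print(tomatometer(scores))     # 100.0
print(simple_average(scores))  # 62.5
```

Same reviews, same critics, and a 37.5-point gap that comes purely from how the votes are counted.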

Metacritic collects reviews from a broad range of critics and aggregates them into one “metascore.” The individual scores are averaged, but they are weighted according to a critic’s popularity, stature, and volume of reviews through an undisclosed process. Several people still consider this the most balanced aggregate score.

2. Why are the ratings so different?

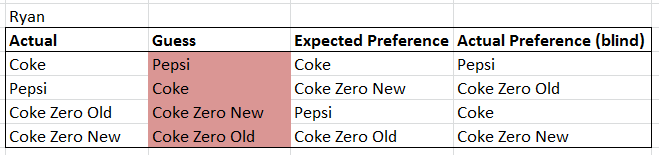

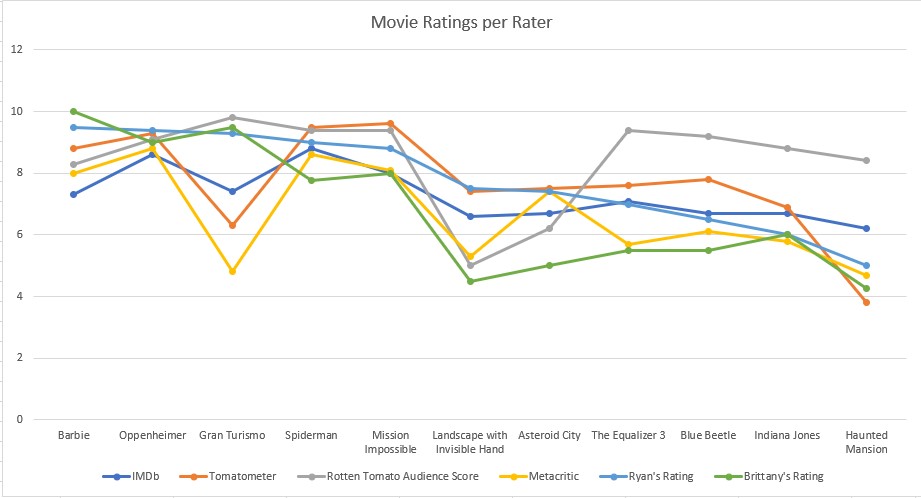

Using the 11 movies Brittany and I saw over the past 60 days, we can pull out the following takeaways:

A. User-based review sites seem more likely to be impacted by manipulation.

- Compared to a professional movie critic, individual user ratings from IMDb and “Audience Score” seem more likely (or easier) to be influenced by hype, controversy, or organized efforts to flood a score with either overly positive or negative reviews to manipulate the score.

- For example, the “Audience Score” seems particularly unreliable. It gave “Haunted Mansion” an 8.4/10, a surprisingly high rating compared to Metacritic (4.7), the Tomatometer (3.8), and my own rating (5). The film was notoriously a box office flop, grossing only $24M during its opening weekend. Could Disney have paid or influenced users to leave positive reviews on the “Audience Score” to artificially inflate the movie’s score?

B. Weighted averages tend to lead to lower average scores.

- IMDb and Metacritic both openly state that their scores are subject to some sort of behind-the-scenes weighting formula, whereas the Tomatometer is based on a simple average. The “Audience Score” doesn’t say whether it’s weighted or not, so I’ll assume it’s a simple average.

- The average rating was 7.28 for IMDb and 6.66 for Metacritic, both lower than the simple averages taken from the Tomatometer (7.68) and the “Audience Score” (8.45). This could be because the former sites exclude (or dilute) outliers and suspicious reviews like the ones we saw with “Haunted Mansion” in section A. Weighting may also help explain why the two user-based scores, IMDb and the “Audience Score,” are so different.
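Since neither IMDb nor Metacritic publishes its formula, the best I can do is a toy illustration of the idea. In the sketch below, the ratings and weights are entirely made up: three ordinary votes plus three suspicious perfect 10s, with the 10s counted at a quarter of a normal vote in the weighted version.

```python
def weighted_mean(ratings, weights):
    """Average of ratings where each rating counts in proportion to its weight."""
    total_weight = sum(weights)
    return sum(r * w for r, w in zip(ratings, weights)) / total_weight


# Hypothetical user ratings: three ordinary votes and three suspicious 10s.
ratings = [6, 7, 6, 10, 10, 10]

# Simple average: every vote counts the same.
equal_weights = [1, 1, 1, 1, 1, 1]

# Made-up weighting that discounts the suspicious 10s to a quarter vote each.
discounted_weights = [1, 1, 1, 0.25, 0.25, 0.25]

print(round(weighted_mean(ratings, equal_weights), 2))       # 8.17
print(round(weighted_mean(ratings, discounted_weights), 2))  # 7.07
```

In this toy case, diluting the suspicious votes pulls the score down by about a point, which is the same direction as the gap between the weighted and unweighted sites above. Weighting only lowers a score when the discounted votes are the high ones, though, so this is a plausible explanation rather than a proven one.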

C. Critics often rate movies lower than everyday moviegoers.

- Critics and audience members often have different criteria for evaluating films. For example, critics often consider cinematography, artistic value, and other technical aspects. Audience members, on the other hand, may be more influenced by sampling bias (i.e., only going to movies they’re likely to enjoy and rate highly), herd mentality (i.e., if Brittany likes a movie I’m inclined to agree with her), or the entertainment factor (i.e., the number of explosions).

- This discrepancy was most apparent for “Gran Turismo.” Metacritic’s score of 4.8 was significantly lower than the user-based reviews from the “Audience Score” (9.8) and IMDb (7.4). Brittany and I also rated the movie highly, at 9.3 and 9.5 respectively. On the other end of the spectrum, Metacritic rated the artistic film “Asteroid City” at 7.4, higher than IMDb (6.7) and the “Audience Score” (6.2).

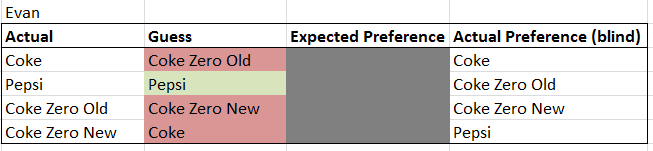

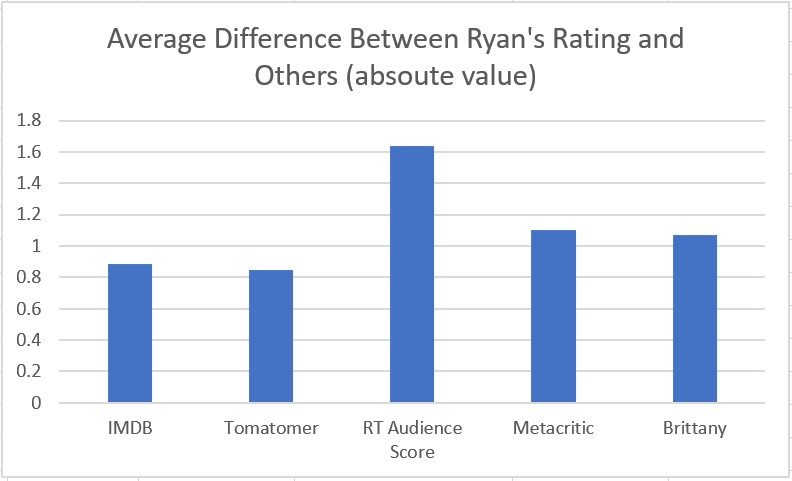

3. Which rating service most closely aligns with my own ratings?

My own ratings most closely align with the Tomatometer. However, I don’t think the Tomatometer tells the entire story by itself. I’d look to IMDb first, given that (A) my personal rating is more likely to align with other audience members (rather than critics) and (B) IMDb appears to do a good job of sorting out outliers and manipulation.

To wrap it all up:

Ratings don’t always make sense. They can be good guides, but the magic of the cinema is largely rooted in your own personal taste and connection to the film. My favorite parts of the movie-going experience have been the excitement of being in a sold-out theater on opening night, always having something to talk about around the office water cooler, and having a weekly date with Brittany.

Oh, and Nicole Kidman is annoying.